Convolutional Neural Network (CNN) - 5KK73 GPU Assignment 2013

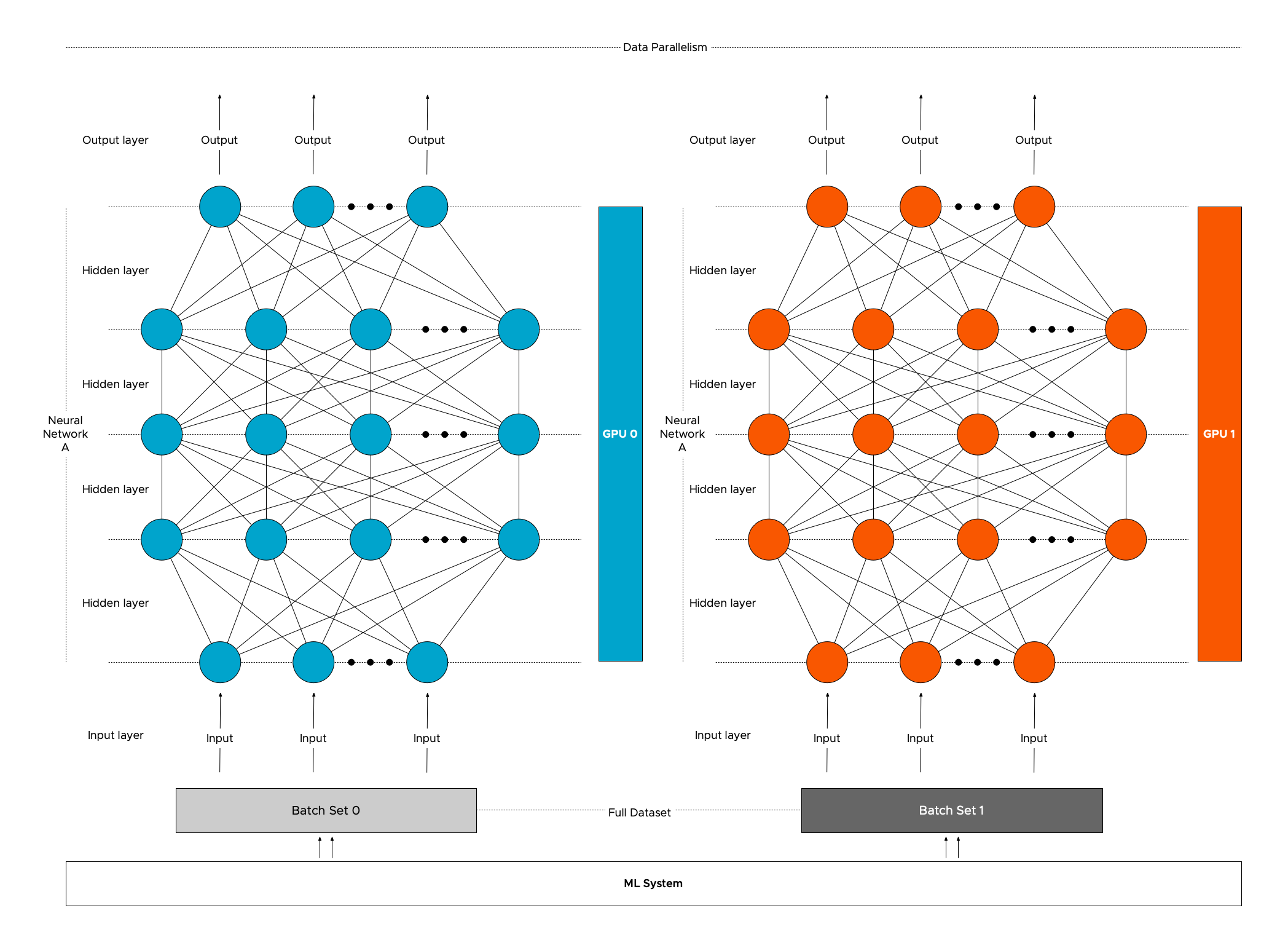

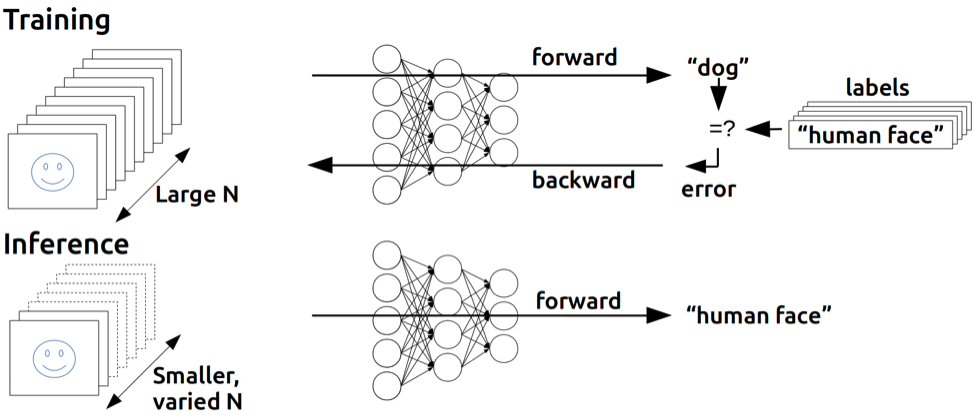

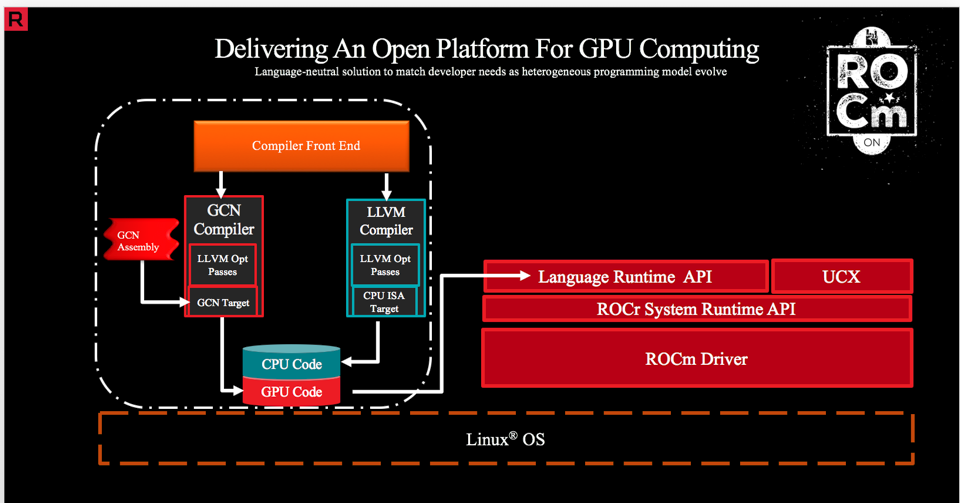

PARsE | Education | GPU Cluster | Efficient mapping of the training of Convolutional Neural Networks to a CUDA-based cluster

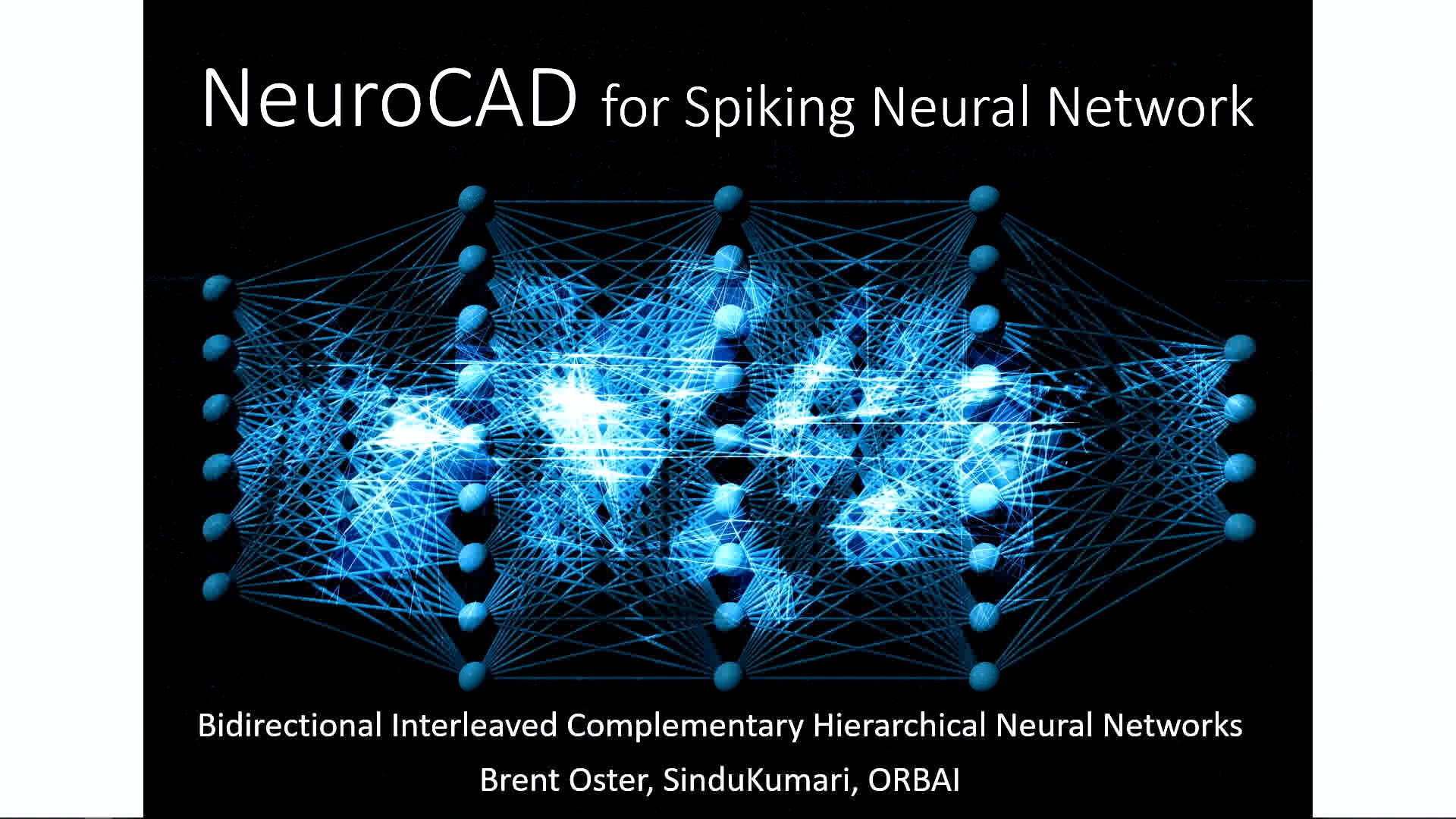

GTC Silicon Valley-2019: Training Spiking Neural Networks on GPUs with Bidirectional Interleaved Complementary Hierarchical Networks | NVIDIA Developer

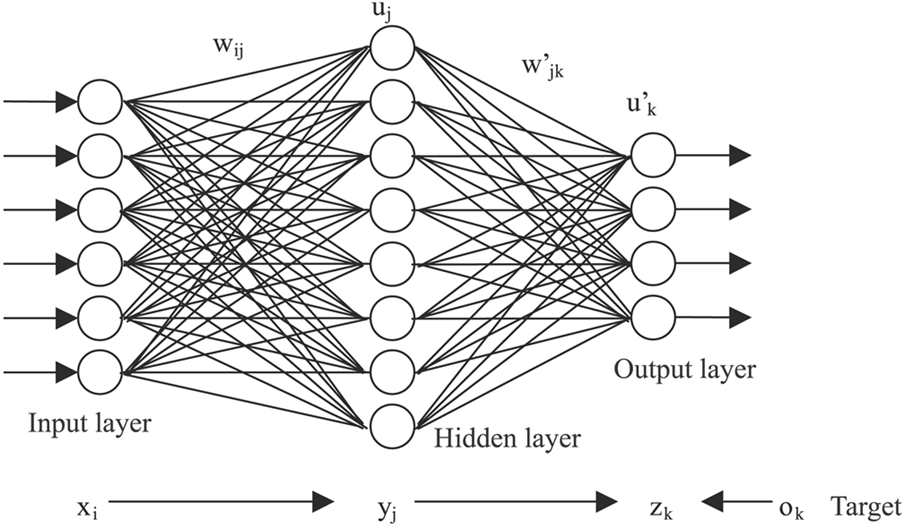

If I'm building a deep learning neural network with a lot of computing power to learn, do I need more memory, CPU or GPU? - Quora

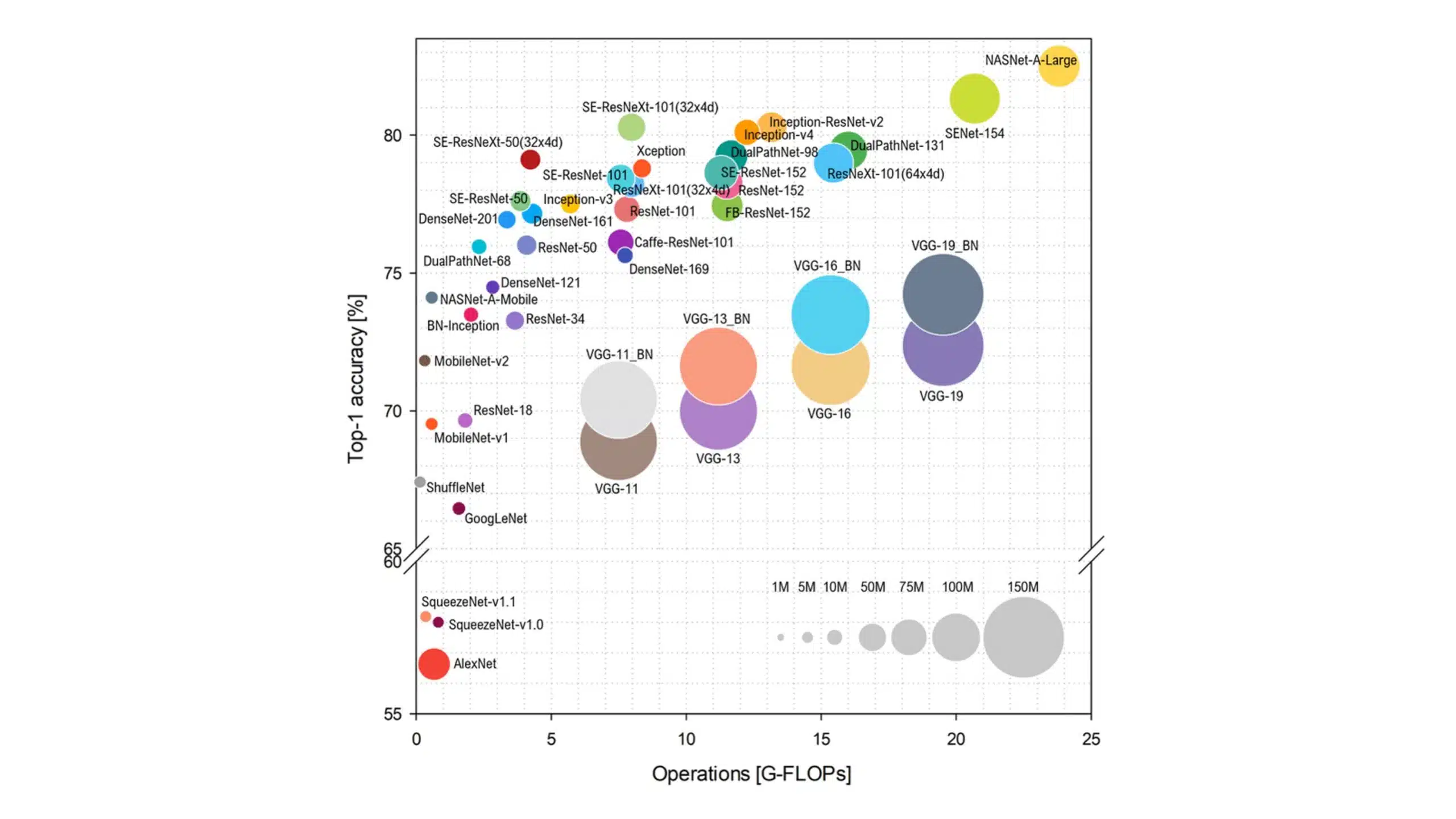

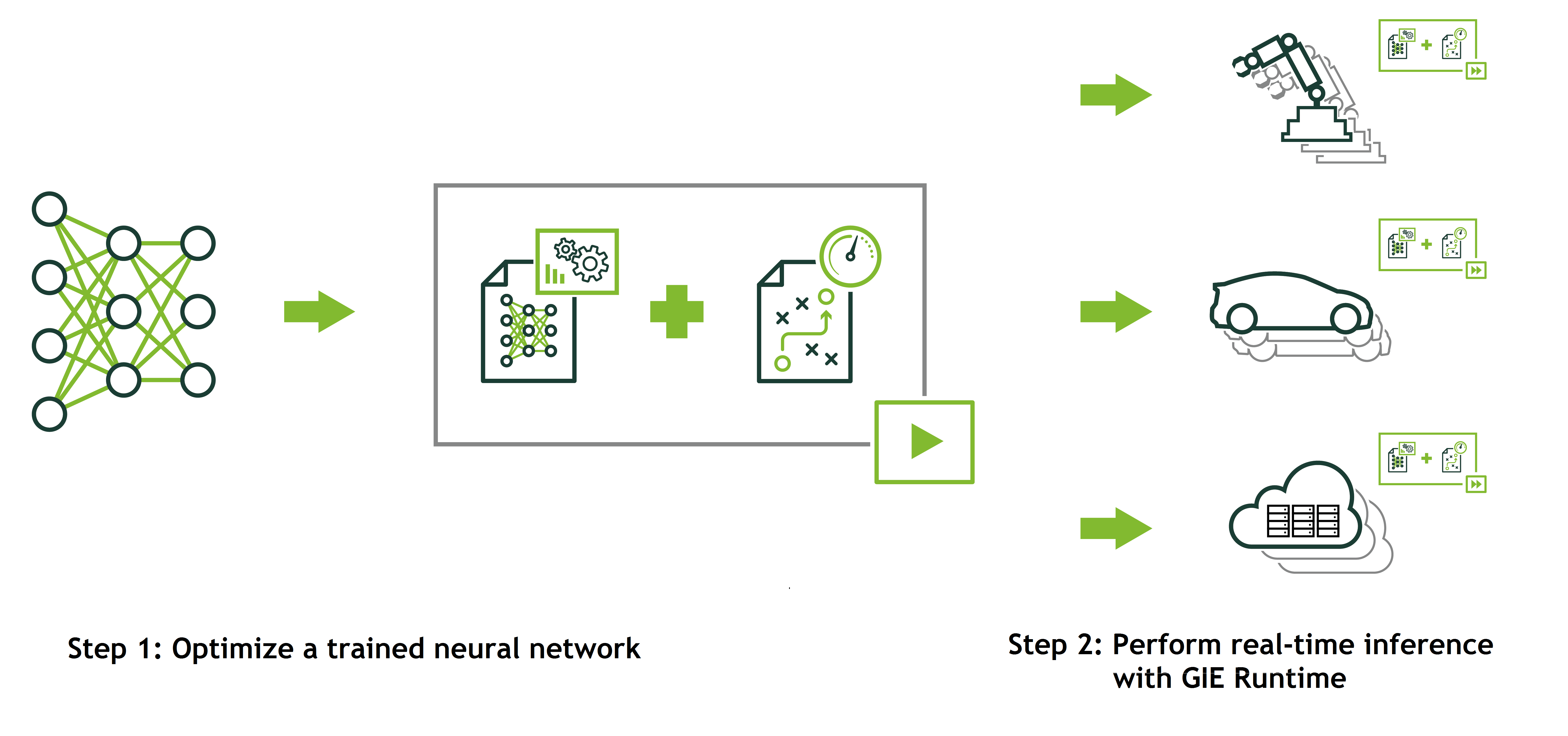

Discovering GPU-friendly Deep Neural Networks with Unified Neural Architecture Search | NVIDIA Technical Blog

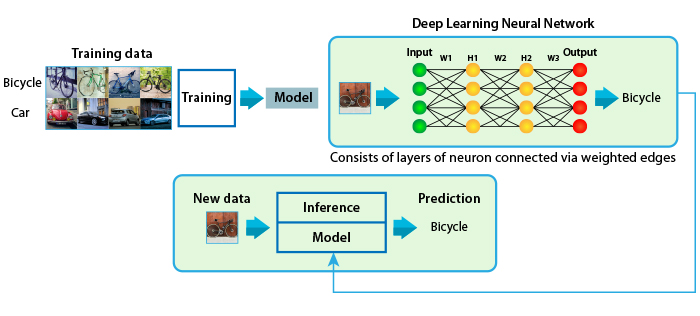

Did Nvidia Just Demo SkyNet on GTC 2014? - Neural Net Based "Machine Learning" Intelligence Explored

Energy-friendly chip can perform powerful artificial-intelligence tasks | MIT News | Massachusetts Institute of Technology

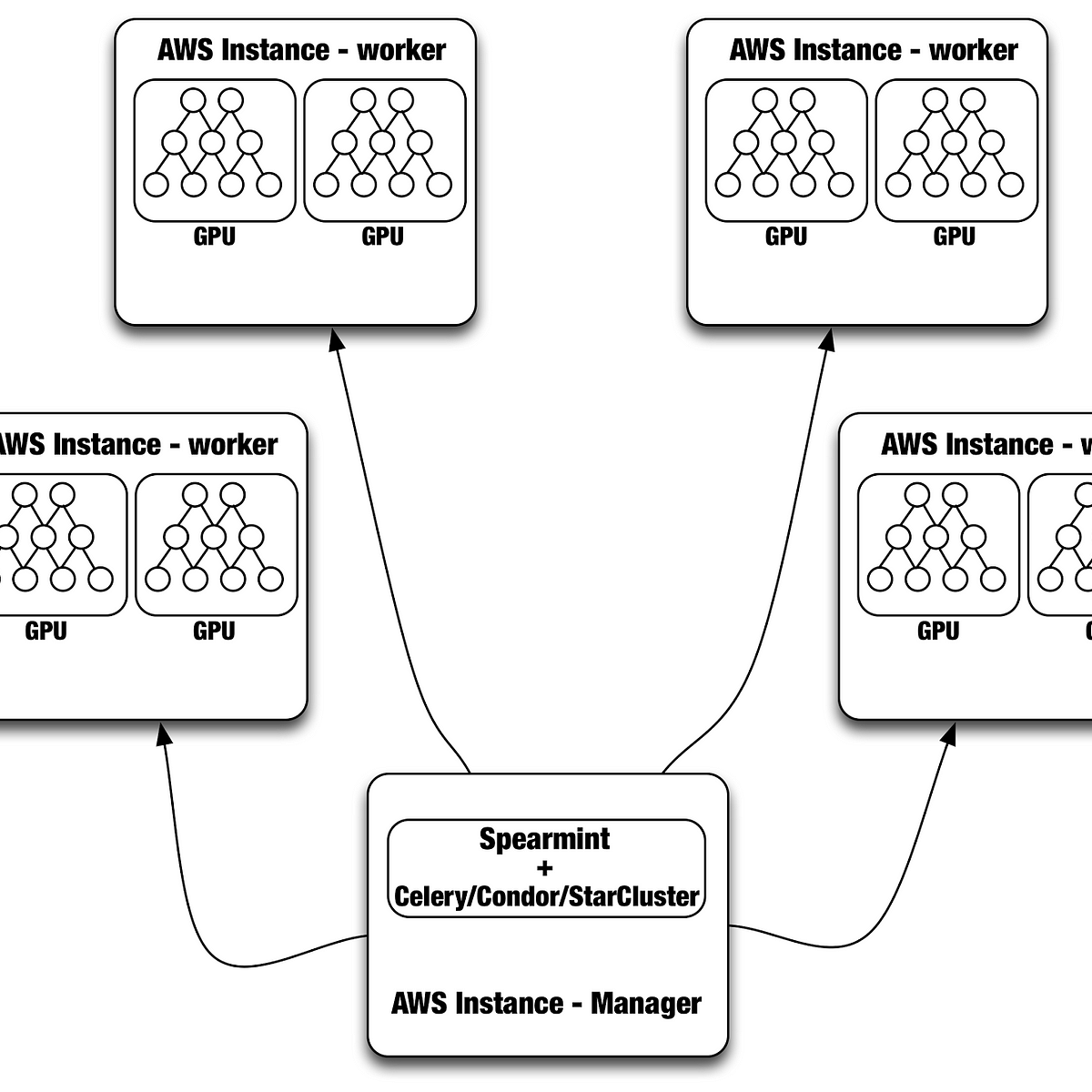

Distributed Neural Networks with GPUs in the AWS Cloud | by Netflix Technology Blog | Netflix TechBlog